I am a Research Associate working with Prof. M. Hanmandlu, building practical, human-centered robotic systems. My focus is at the meeting point of learning and perception. The aim is simple: help robots behave reliably around people, stay steady under noise and latency, and work in everyday environments.

Earlier, at the I3D Lab at the Indian Institute of Science (IISc), Bangalore, with Prof. Pradipta Biswas, I worked on assistive human–robot interaction and designed the controller for an autonomous aircraft taxiing prototype.

I received my Bachelor’s degree in Electronics and Communication Engineering from RIT, Kottayam, graduating with distinction in the general scholarship program. As an undergraduate researcher at the Centre for Advanced Signal Processing (CASP lab) with Dr. Manju Manuel, I worked on FPGA design and implementation. For details on my current directions, see my Research Statement (Feb 2025).

* denotes equal contribution.

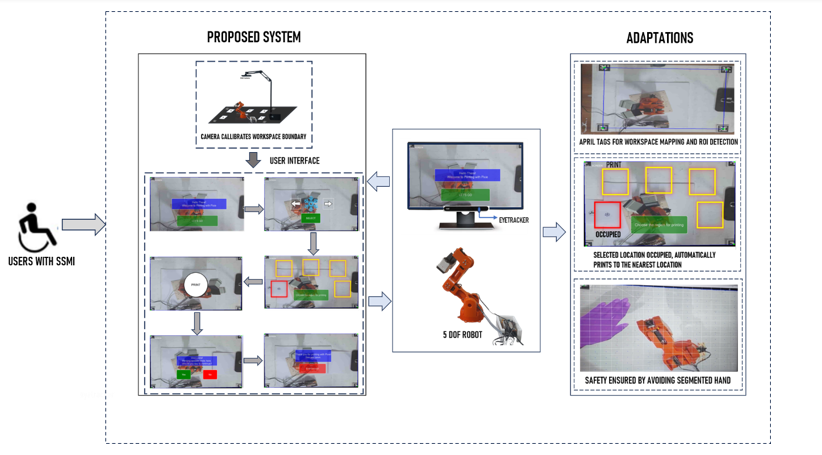

2024

Eye-Gaze-Enabled Assistive Robotic Stamp Printing System for Individuals with Severe Speech and Motor Impairment

Anujith Muraleedharan, Anamika J H, Himanshu Vishwakarma, Kudrat Kashyap, Pradipta Biswas

Proceedings of the 29th International Conference on Intelligent User Interfaces

Abstract

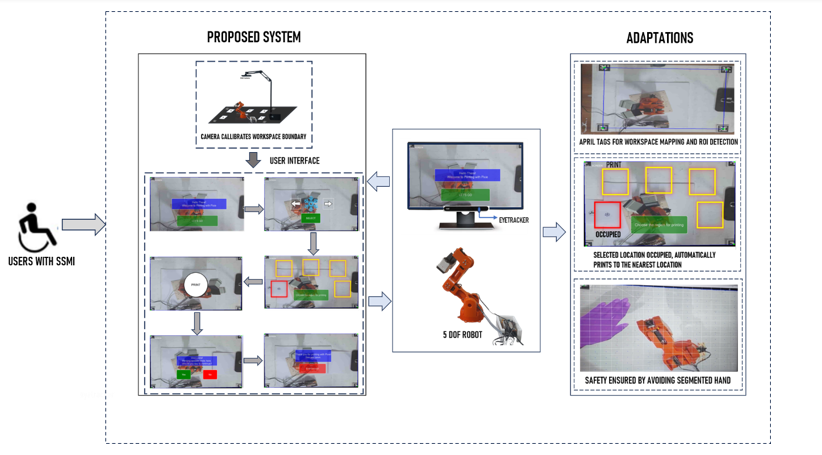

Robotics has found extensive application in assistive aids for individuals with severe speech and motor impairment (SSMI). This article describes the design and development of an eye-gaze-controlled user interface for manipulating a robotic arm. In the reported user studies, participants used eye-gaze input to select a stamp from two designs and to choose the stamping location on a card from three designated boxes in the user interface. The entire process, from stamp selection to stamping-location selection, is controlled by eye movements. A print button in the user interface starts the robotic arm, allowing the user to create personalized stamped cards independently. Extensive user interface trials showed that individuals with SSMI improved, with a 33.2% reduction in the average time taken to complete the task and a 42.8% reduction in its standard deviation. These results suggest that the system is effective and has the potential to enhance the autonomy and creativity of individuals with SSMI, contributing to the development of inclusive assistive technologies.


2023

Developing a Computer Vision based system for Autonomous Taxiing of Aircraft

Prashant Gaikwad, Abhishek Mukhopadhyay, Anujith Muraleedharan, Mukund Mitra, Pradipta Biswas

AVIATION Journal Vol 27 No 4 (2023)

Abstract

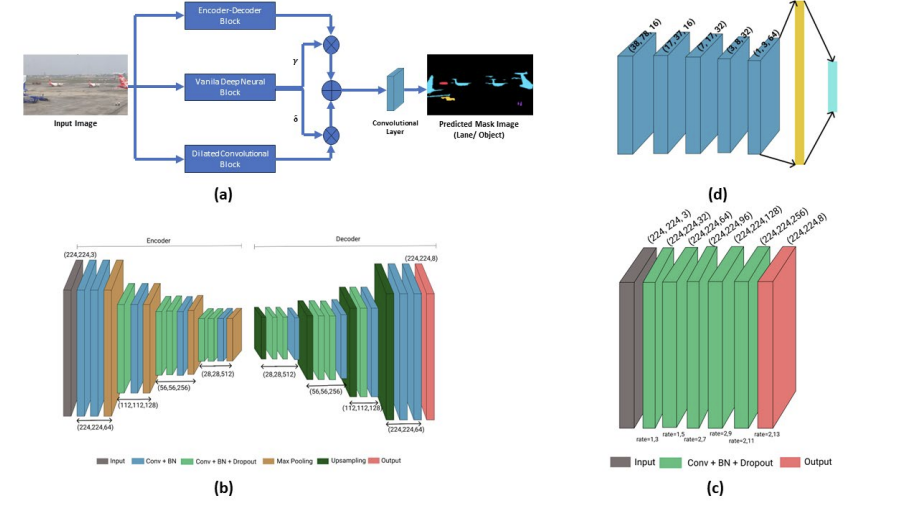

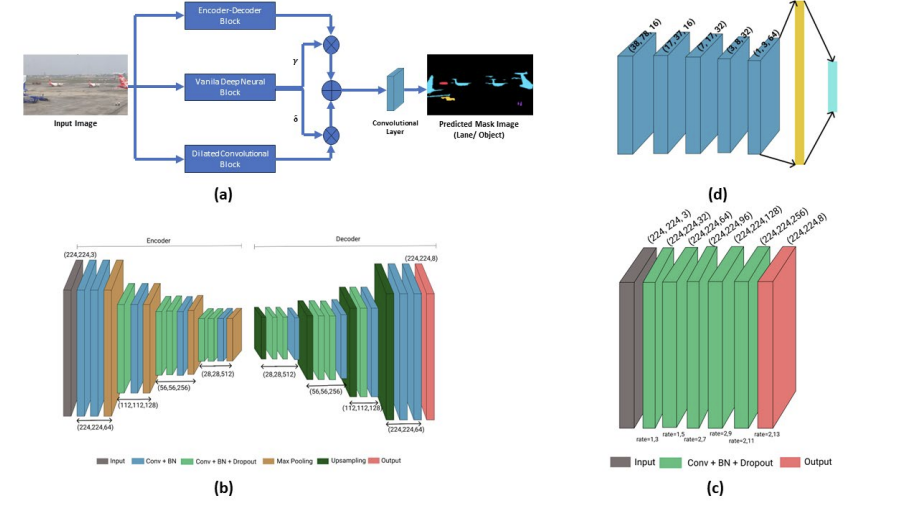

This paper proposes a computer-vision-based autonomous system for taxiing an aircraft in the real world. The system integrates lane detection with collision detection and avoidance models. The lane detection component employs a segmentation model consisting of two parallel architectures. An airport dataset is proposed and used to evaluate the collision detection model, whose purpose is to avoid collisions with ground vehicles. The lane detection model identifies the aircraft’s path and transmits control signals to the steer-control algorithm, which in turn uses a controller to guide the aircraft along the central line with 0.013 cm resolution. A comparative analysis of candidate controllers identifies the Linear Quadratic Regulator (LQR) as the best choice, with an average deviation of 0.26 cm from the central line. The collision detection model is also compared with other state-of-the-art models on the same dataset and outperforms them. A detailed study under different lighting conditions evaluates the efficacy of the proposed system: the lane detection and collision avoidance modules achieve true positive rates of 92.59% and 85.19%, respectively.
